Google's John Mueller offered useful guidance on whether deleting unindexed pages helps resolve the “Discovered – Currently Not Indexed” status.

Pages that search robots do not crawl can become a significant problem even for large projects. At the same time, webmasters do not agree on whether simply removing such pages is effective.

Mueller's answer sheds light on how the automated systems responsible for indexing content work.

- Discovered – Currently Not Indexed

- Deleting non-indexed pages

- Will compacting the information help?

- Potential causes of indexing problems

- Server capacity

- Overall site quality

- Site optimization for upcoming indexing

- Main menu

- Internal linking

- Thin (weak) content

- The impact of the “Discovered – Currently Not Indexed” problem on online sales

Discovered – Currently Not Indexed

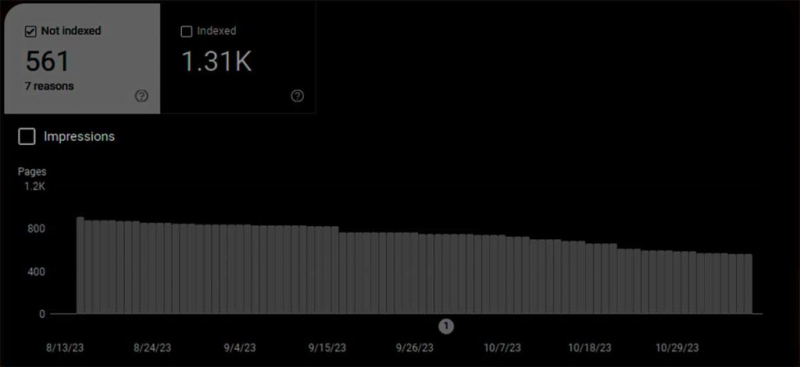

To track problems that affect how visible a site's pages are in organic search results, Google offers a dedicated tool: Search Console.

Indexing status is one of the most important indicators in the tool's reports, because it reflects the current situation: how many pages are in the index and bring traffic, and how many simply sit in the site's database without contributing to your visibility in search.

In most cases, a Search Console message about an unindexed page is a serious reason to act quickly. There may be many reasons why search bots have not crawled the page, even though the official Google documentation names only one:

The page was found by Google, but not crawled yet

This message means that Google has found the page but has not crawled it yet. Typically, Google wanted to crawl the URL, but doing so was expected to overload the site, so the crawl was rescheduled.

This is why the last crawl date is empty in the report. Google judged the server's capacity to be insufficient and postponed work with the site for a while, giving you the opportunity to improve the availability of the resource.

Mueller, however, does not limit himself to this single explanation.

Deleting non-indexed pages

The theory that deleting some pages can improve how well the rest of the site is crawled has become popular among webmasters.

Many experienced specialists will recall the so-called crawl budget, the idea that a bot crawls only a limited number of pages on each visit to a site. In other words, only a certain number of pages will be processed at a time. So if you have uploaded several hundred materials in a week, they may well appear in search results with a delay, the length of which depends on how often the site is crawled.

Google's position on this issue is that there is no crawl budget in the sense webmasters usually imagine, that is, a fixed quota of pages per visit. The volume of crawled pages depends on the technical capabilities of the server and on a number of internal parameters that are not disclosed.

In addition, Google cannot store every page on the web. There is simply too much information. Automated algorithms therefore have to be selective and choose only the materials worth keeping. As a rule, pages that offer some value to users are indexed.

Will compacting the information help?

This question is especially relevant for large online stores and marketplaces. If you create a separate product card for each variant of a product, the result is a lot of clones with almost identical descriptions. If you combine them into one card with the ability to switch parameters such as color, size, and style, the number of pages requiring indexing drops significantly.

John Mueller said that there is no definite answer to this question. He did, however, offer some general recommendations:

Consolidating site pages does not in itself improve indexing. What matters is how it affects the quality of the published information. If the quality increases, consolidation leaves search bots with a better impression of the site, which in turn has a beneficial effect on indexing speed.

That is why developers are advised to focus on the quality of the information provided rather than on individual parameters that may affect how bots crawl the site. Reducing the number of pages does not by itself improve search performance; it only reduces the visibility of the site.
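The consolidation of variant pages described above can be illustrated with a minimal sketch. The catalog rows, field names, and URL scheme are all hypothetical; the point is only to show how several near-identical variant pages collapse into one card with selectable options.

```python
from collections import defaultdict

# Hypothetical catalog rows: one URL per variant of the same product.
variant_pages = [
    {"url": "/shirt-red-m", "product": "shirt", "color": "red", "size": "M"},
    {"url": "/shirt-red-l", "product": "shirt", "color": "red", "size": "L"},
    {"url": "/shirt-blue-m", "product": "shirt", "color": "blue", "size": "M"},
    {"url": "/mug-white", "product": "mug", "color": "white", "size": "one size"},
]


def consolidate(pages):
    """Merge variant rows into one card per product with selectable options."""
    options = defaultdict(lambda: {"colors": set(), "sizes": set()})
    for page in pages:
        card = options[page["product"]]
        card["colors"].add(page["color"])
        card["sizes"].add(page["size"])
    return {
        product: {
            "url": f"/{product}",  # assumed URL scheme for the merged card
            "colors": sorted(opts["colors"]),
            "sizes": sorted(opts["sizes"]),
        }
        for product, opts in options.items()
    }


cards = consolidate(variant_pages)
print(len(variant_pages), "variant pages ->", len(cards), "product cards")
```

As the article notes, the smaller page count is not the goal in itself; the merged card only helps if it is more useful to visitors than the clones it replaces.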

Potential causes of indexing problems

Google may find a page but decline to index it for one of the following reasons:

- Server capacity.

- The overall quality of the site.

Server capacity

The number of requests that the server can handle directly affects how fast the site is crawled. Search bots try not to saturate the server's bandwidth, because doing so would interrupt access to the published content.

Large sites receive more attention, and several bots may crawl them at once, which significantly increases the number of simultaneous requests.

It should also be remembered that Google is not the only search engine. Bots from other companies, such as Microsoft and Apple, may crawl the site at the same time. Hundreds or even thousands of bots visiting a large site simultaneously can have a significant impact on the server's ability to process incoming requests.

Regularly check your server logs and monitor peak loads to understand whether the current capacity is sufficient to ensure uninterrupted access to published materials.
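One way to start with the log check is a rough count of crawler requests per hour. The sketch below assumes the access log uses the common "combined" format and identifies bots by user-agent substrings; both are assumptions, the sample lines are invented, and user agents can be spoofed, so treat the numbers as an estimate.

```python
import re
from collections import Counter

# Assumption: access log lines follow the common "combined" format.
LOG_LINE = re.compile(
    r'\[(?P<day>[^:]+):(?P<hour>\d{2}):\d{2}:\d{2} [^\]]+\] '
    r'"[^"]*" \d{3} \S+ "[^"]*" "(?P<agent>[^"]*)"'
)

# Assumption: user-agent substrings that identify the crawlers of interest.
BOT_MARKERS = ("Googlebot", "bingbot", "Applebot")


def bot_hits_per_hour(lines):
    """Count crawler requests per (day, hour, bot) bucket."""
    hits = Counter()
    for line in lines:
        match = LOG_LINE.search(line)
        if not match:
            continue  # skip lines that do not fit the assumed format
        agent = match["agent"].lower()
        for bot in BOT_MARKERS:
            if bot.lower() in agent:
                hits[(match["day"], match["hour"], bot)] += 1
                break
    return hits


# Invented sample lines, for illustration only.
sample_log = [
    '66.249.66.1 - - [10/Oct/2023:13:55:36 +0000] "GET /a HTTP/1.1" 200 512 "-" "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"',
    '66.249.66.1 - - [10/Oct/2023:13:58:02 +0000] "GET /b HTTP/1.1" 200 734 "-" "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"',
    '157.55.39.1 - - [10/Oct/2023:14:01:10 +0000] "GET /a HTTP/1.1" 200 512 "-" "Mozilla/5.0 (compatible; bingbot/2.0; +http://www.bing.com/bingbot.htm)"',
    '203.0.113.7 - - [10/Oct/2023:14:02:44 +0000] "GET /a HTTP/1.1" 200 512 "https://example.com/" "Mozilla/5.0 (Windows NT 10.0; Win64; x64)"',
]

hits = bot_hits_per_hour(sample_log)
for (day, hour, bot), count in sorted(hits.items()):
    print(f"{day} {hour}:00 {bot}: {count} requests")
```

Comparing these hourly counts with the server's response times and error rates for the same hours gives a first indication of whether crawler traffic coincides with the peaks.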

Overall site quality

This is a less obvious reason why a site may be under-indexed. Google forms a quality assessment of every site that is a candidate for the index. If you want to collect organic traffic, you should pay close attention to this parameter.

John Mueller said that even a single low-quality section of a site can significantly lower its overall quality assessment:

When assessing the quality of a site as a whole, Google takes into account significant portions of low-quality content, which can affect the overall assessment. The search engine, as a rule, does not care why some of the materials turned out to be low-grade. At the same time, the quality assessment is influenced not only by the content of the articles, but also by factors such as layout structure and overall design.

Mueller also said that determining quality is a long-term process that can take months, because technically complex, highly specialized topics require a high level of expertise not only from site owners, but also from the employees who conduct the analysis.

Site optimization for upcoming indexing

Commercial projects require close attention to every element of the page, because search engines are extremely picky about small details during indexing.

Main menu

All of the most important sections of the site should appear in the main menu. This improves usability and the quality of the user experience. In less common cases, the main menu may also link directly to the key materials of the resource.

Internal linking

Do not forget to connect the pages of the site to each other with internal links. Link to materials that are thematically appropriate in context. That way users get all the necessary information right away and do not have to return to the search engine to enter clarifying queries.
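A simple way to audit internal linking is to count how many other pages link to each page and flag those with no inbound links. The link map below is hypothetical; in practice it would come from a crawl of your own site.

```python
from collections import Counter

# Hypothetical internal link map: page -> pages it links to.
site_links = {
    "/": ["/catalog", "/blog"],
    "/catalog": ["/catalog/shoes", "/"],
    "/catalog/shoes": ["/catalog"],
    "/blog": ["/blog/sizing-guide"],
    "/blog/sizing-guide": [],
    "/blog/old-post": ["/"],
}


def inbound_link_counts(links):
    """Count, for every known page, how many other pages link to it."""
    counts = Counter({page: 0 for page in links})
    for source, targets in links.items():
        for target in set(targets):  # count each linking page once
            if target != source:  # ignore self-links
                counts[target] += 1
    return counts


def weakly_linked(links, minimum=1):
    """Return pages with fewer than `minimum` inbound internal links."""
    counts = inbound_link_counts(links)
    return sorted(page for page, n in counts.items() if n < minimum)


orphans = weakly_linked(site_links)
print("Pages without inbound internal links:", orphans)
```

Pages flagged this way are candidates for contextual links from thematically related materials.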

Thin (weak) content

In essence, these are pages that contain very little useful information. Today it is not enough to fill them with the bare minimum of necessary data. If this is a page with contact details, add short profiles of the department employees who respond to visitors' requests. Expand thin content, but do not clutter it with useless and uninformative blocks of text.
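A rough first pass at finding thin pages is to count the visible words on each page. The sketch below makes two assumptions: the page HTML is already available as strings, and the 150-word threshold is arbitrary. Word count is only a crude proxy for usefulness, so flagged pages still need a manual review.

```python
from html.parser import HTMLParser


class TextExtractor(HTMLParser):
    """Collect visible words, skipping <script> and <style> contents."""

    def __init__(self):
        super().__init__()
        self.words = []
        self._skip_depth = 0

    def handle_starttag(self, tag, attrs):
        if tag in ("script", "style"):
            self._skip_depth += 1

    def handle_endtag(self, tag):
        if tag in ("script", "style") and self._skip_depth:
            self._skip_depth -= 1

    def handle_data(self, data):
        if not self._skip_depth:
            self.words.extend(data.split())


def visible_word_count(html):
    parser = TextExtractor()
    parser.feed(html)
    parser.close()
    return len(parser.words)


MIN_WORDS = 150  # assumption: an arbitrary threshold, tune it for your site


def thin_pages(pages, min_words=MIN_WORDS):
    """Return URLs whose visible text is shorter than `min_words` words."""
    return sorted(
        url for url, html in pages.items()
        if visible_word_count(html) < min_words
    )


# Hypothetical pages, for illustration only.
pages = {
    "/contacts": "<h1>Contacts</h1><p>Call us.</p><script>var x = 1;</script>",
    "/guide": "<p>" + "word " * 200 + "</p>",
}
print("Candidates for review:", thin_pages(pages))
```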

The impact of the “Discovered – Currently Not Indexed” problem on online sales

It is not enough just to put goods on the shelves and wait for an incredible influx of customers. Modern business requires a full staff of experienced consultants who can promote your offers to all categories of potential buyers.

Internet resources work on the same principle. An experienced webmaster can design a page in such a way that the search engine wants to index it.

By filling a resource with content that is valuable to users, you not only promote it in organic search results, but also help your target audience better satisfy their search intent.